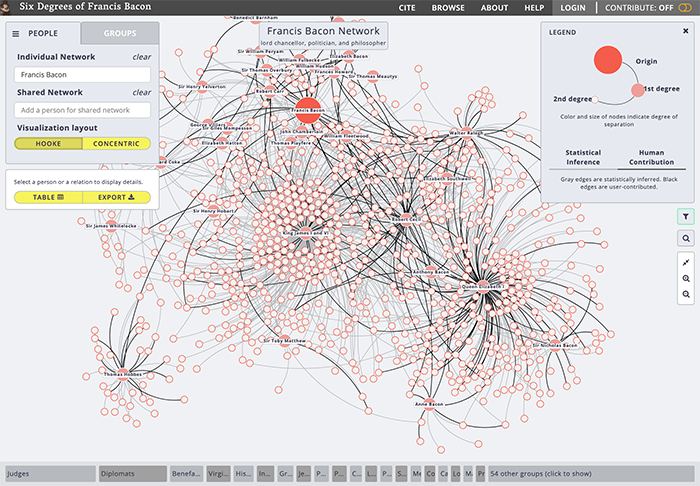

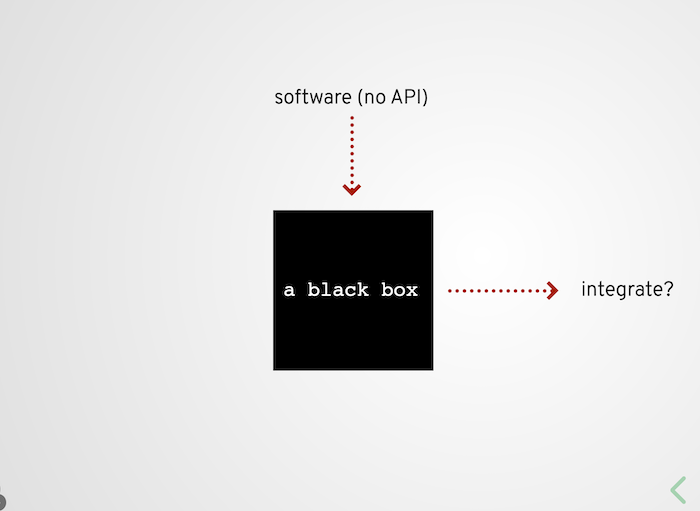

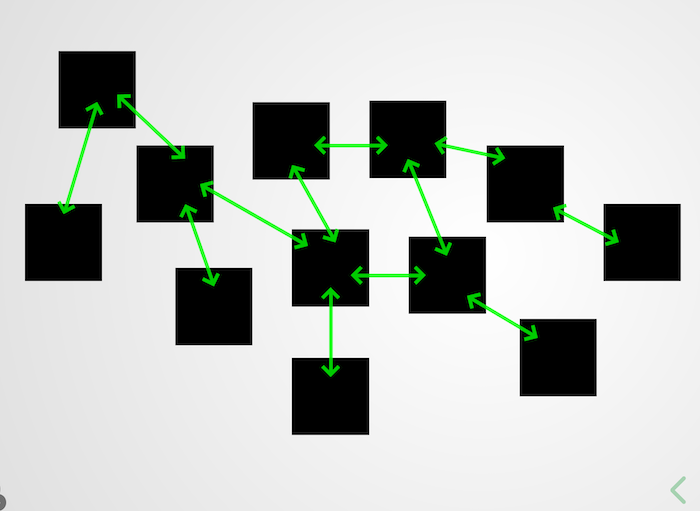

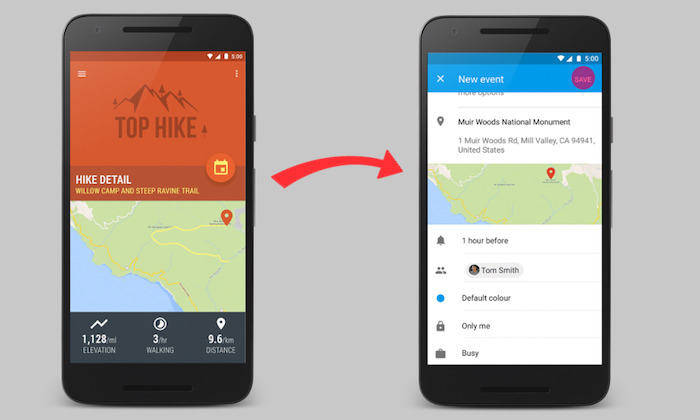

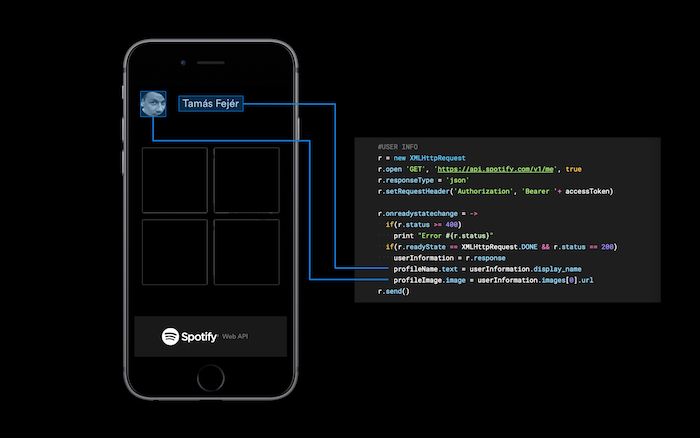

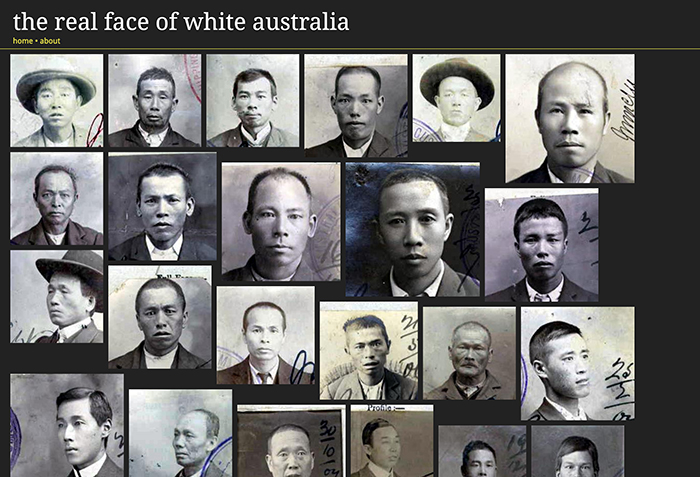

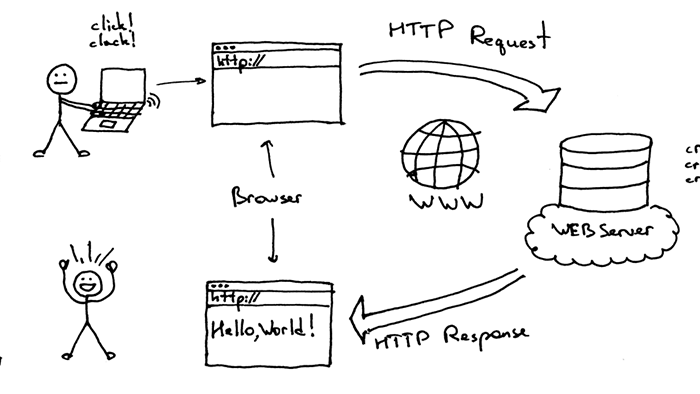

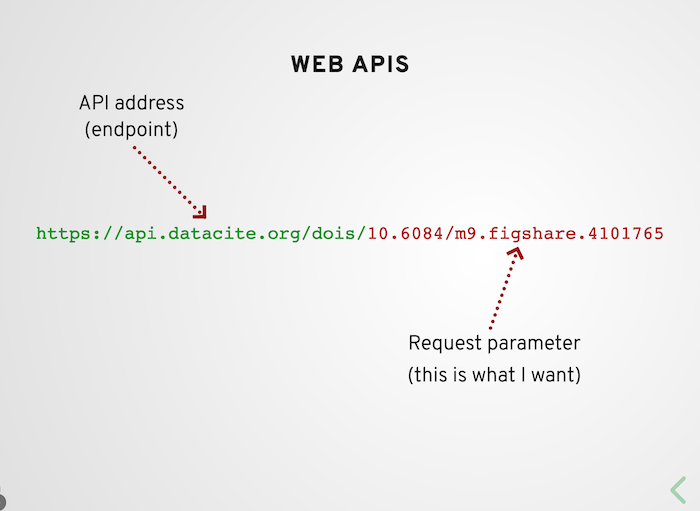

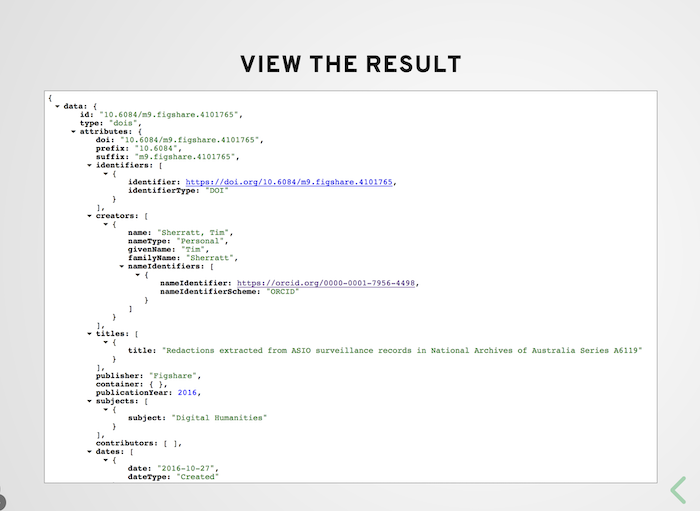

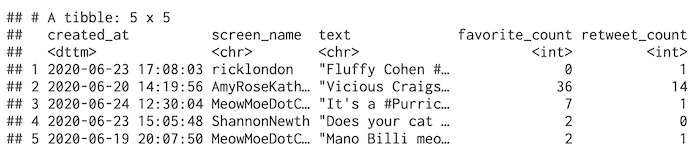

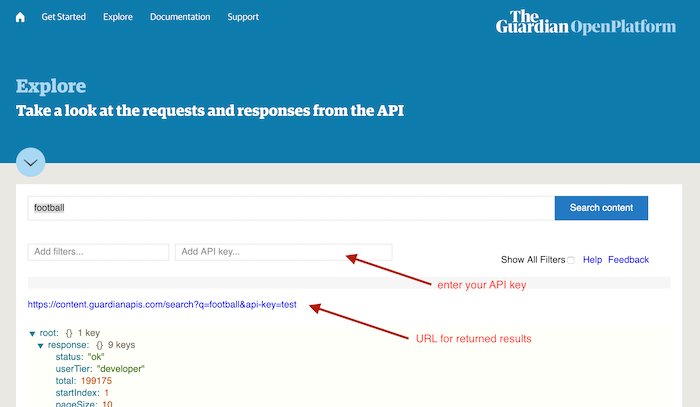

class: title-slide, top, left background-image: url(img/rc_apis_2020.jpg) background-size: contain --- class: center, middle .alert[ **Researchers from all corners of academia and the private and public sectors are collecting and analyzing textual data from online platforms, and they are using this data to answer questions about social attitudes, trends and fashions, consumer behavior, political deliberations, social interactions, interpersonal relationships, and many other social phenomena.** <br> *Text mining: A guidebook for the social sciences.* Gabe Ignatow and Raha Micalcea, 2017. ] ---  The web provides researchers with endless possibilities in areas such as **Machine learning, Natural language processing, Social network analysis, Topic modelling and Data Visualisation**. .footnote[ Source: http://www.sixdegreesoffrancisbacon.com ] --- ### Researcher workflow As data gathering from online sources for analysis has become more and more interesting for academic researchers, in practical terms the **typical workflow** for a researcher involves: -- * Getting the data * Cleaning the data * Analysing the data * Visualising the data. --- ### Overview The focus for this tutorial is the first stage of this process - **Getting the data**. -- <br> There are many ways to obtain data from the Internet. In the simplest cases the data already exists in a tabular format (such as an **Excel or CSV**) and is available to click-and-download. -- <br> Specifically, we’ll look at the conceptual basics of data collection through using ** APIs** (otherwise known as Application Programming Interface). --- ### Aim of this tutorial * Understand **what APIs are and how they work**. * Understand the **use and limitations of APIs for data collection**. * Learn some **basic methods to query APIs** to obtain data. --- class: center, middle # Textual analysis is a broad term for various research methods used to describe, interpret and understand texts. --- ### Text mining as a research method For researchers interested in internet-based methods, terms such as **Web scraping** and **Data Mining** are often used interchangeably to describe techniques to collect and organise **textual data** for analysis. -- <br> For our purpose we can distinguish that: * **Web scraping** refers to the process of automatically extracting textual data from web pages and other digital files (Ignatow & Mihalcea, 2018). * **APIs** provide data in a digital format that computers can understand and use (often referred to as *machine-readable* data). (Sherratt, 2019). --- class: center, middle # So what is an API? --- ### Software with no API  .footnote[ Source: https://slides.com/wragge/introducing-apis-vala#/10 ] ??? This is a single copmputer. It has a job to do, and it does it well. It does it so well, that we want to connect it up to another application to build a workflow. --- ### The glue between different systems  .footnote[ Source: https://slides.com/wragge/introducing-apis-vala#/15 ] ??? APIs are often described as the glue that sticks different computer systems together (Janetzko, 2016). Instead of building large monolithic programs, we can create complex applications by hooking together smaller, specialised programs. --- ### Build new tools & services  .footnote[ Source: https://developers.google.com/calendar ] --- ### Build new tools & services  .footnote[ Source: https://blog.prototypr.io/have-you-heard-about-the-spotify-web-api-8e8d1dac9eaf ] --- ### Build new tools & services  .footnote[ Source: http://invisibleaustralians.org/faces/ ] ??? The White Australia Policy was about people – people whose lives were monitored and restricted because of the colour of their skin. The records are held by the National Archives of Australia. --- class: center, middle # So how do they work? --- ### APIs use the underlying protocols of the web  .footnote[ Source: https://buildinformi.blogspot.com/2015/09/mobile-web-server-how-to-build-web.html ] ??? Request and Response. You make *queries* and you get back *results*. --- ### Send a requests to the API  .footnote[ Source: https://slides.com/wragge/introducing-apis-vala#/13/0/3 ] ??? But instead of sending back a nicely formatted web page, they deliver data in a form that computers can understand. --- ### Instructions in the URL  .footnote[ Source: https://slides.com/wragge/introducing-apis-vala#/18 ] ??? While humans can easily interpret information on a web page, computers need more help. --- ### Get back a Response  .footnote[ Source: https://slides.com/wragge/introducing-apis-vala#/19 ] ??? The results are sent back as data in a standard format like XML or JSON. --- ### In summary <br> .instructions[ **Web sites are for humans** -> listen for urls and deliver nicely formatted web pages that humans can read.. ] <br> .instructions[ **Web APIs are for machines** -> listen for urls and deliver nicely *structured (or machine-readable) data*. (Sherratt, 2019). ] --- class: center, middle # Some issues to consider when using APIs in research --- ### Web scraping v APIs When using textual data extracted through web scraping or APIs there's some things to consider. In both cases the data provider can set restrictions on how their content can be accessed and used. -- <br> **Web scraping** tend to be more flexible as it can be used on most web pages. The flip side is that tools to scrape data from pages are often more difficult to use and may violate a platform’s **terms of service** (Freelon, 2018). --- ### Web scraping v APIs <br> <br> .instructions[ Websites can have a crawling policy that states which parts of their site can be crawled. The most commonly used one is robots.txt, which is a file that is placed at the root of the website (e.g., http://www.cnn.com/robots.txt) (Ignatow & Rada, 2017). ] --- ### Terms of Service (TOS) Most social media companies like **Facebook, Twitter and LinkedIn** require users to agree to a **terms of service agreement** before registering an account. -- <br> Generally this grants them permission to use the content you post on their site, and indemnifies them against any libelous or defamatory materials posted onto their platform. -- <br> These terms also include strict requirements for using their API services. APIs define the structure of the data that can be extracted and have policies for what you can and can’t do with the data you collect (Ignatow & Rada, 2017). --- ### Access & Rate limits Not all APIs work the same way and the use of data is strictly regulated by policies of the API provider (Janetzko, 2016). In April 2015 **Facebook** closed its public search API. Since then privacy concerns and the monetisation of data access has meant 'public' or 'open' APIs have disappeared or imposed greater restrictions, or commercial barriers. -- <br> APIs place certain limitations on how many requests you can make per minute, hour or day. The standard (free) **Twitter API** only allows you to collect tweets going over the past 10 days, and doesn’t provide mechanisms to work with historical tweets. --- ### Privacy / Research ethics It's worth remembering **ethical issues are a major consideration in any form of online research**. By employing TOS-compliant methods, you are respecting the business prerogatives of the company that created the platform you are studying but not necessarily the dignity and privacy of the platform’s users (Freelon, 2018). -- <br> .instructions[ **Twitter** allow scholars to harvest enormous data sets, it is important for us to consider the human effects of working with and representing people's statements out of context, particularly when the public nature of Twitter that makes the data available also makes individual tweets searchable and traceable (Stewart, 2018). ] ??? One of the most important purposes of **research ethics** is to protect research participants. Do not confuse TOS compliance with human subjects compliance or privacy protection. The question of whether public tweets are by default public data is an ethical issue that is contested. --- class: center, middle # APIs for data collection --- ### Getting started Collecting data through an APIs is only possible if the data collection programs are authorized to do so. There are a series of steps before you can start collecting data: -- * To begin you need to register a developer account with the provider you intend to use. * You then need to register the ‘apps’ you plan to create. * You then need to save the *access token* and *access key* that have been generated for your app. -- .instructions[ **Keys & Tokens** are long strings of numbers and letters you need to query and receive data back from the API. ] --- ### Using the Twitter API .midi[ .left-column[  ] .right-column[ * Apply for a **developer account** (https://developer.twitter.com/en/apply-for-access) <br> * Register your new app and save a copy of the **Keys and Tokens** (these will go into our R script) ] ] <br> <br> <br> <br> <br> <br> <br> .question[ **R** is one of the most popular *free* tools used for data-analytics as it contains a number of libraries for text-mining, data cleaning and manipulation and visualisations. ] --- ### /rtweet We're using the R package **rtweet** (https://rtweet.info): ```r # Install the packages we'll be using ------------------------------------- install.packages(c("tidyverse","tidytext","rtweet"), dep = TRUE) library(tidyverse) library(tidytext) library(rtweet) ``` Next we include the name of our app and the four access keys and tokens: ```r # Set the token to authenticate our app ----------------------------------- token <- create_token( app = "YOUR APP NAME", consumer_key = "YOUR API_KEY", consumer_secret = "YOUR API_SECRET_KEY", access_token = "YOUR ACCESS_TOKEN", access_secret = "YOUR ACCESS_TOKEN_SECRET",) ``` --- ### /rtweet ```r # Set-up the query for our tweets ----------------------------------------- tweets <- search_tweets(q = "#cats", # our query term (q) n = 18000, # max number of tweets include_rts = FALSE, # don't include retweets `-filter` = "replies", # don't include replies lang = "en") # tweets in English ``` --- ### /rtweet ```r # Check what's come back -------------------------------------------------- tweets %>% sample_n(5) %>% select(created_at, screen_name, text, favorite_count, retweet_count) ```  ```r # Save the results to a file ---------------------------------------------- write_as_csv(tweets, "cats-tweets-June2020.csv") ``` --- ### A more complex query .midi[ .left-column[  ] .right-column[ For the Twitter example we just ran a query for tweets that contained a single work ('cats’). Of course we can specify several parameters into our queries to help narrow and focus our search. Let’s look at The National Library of Australia's **Trove API** operates in order to build a more detailed set of criteria to focus our search request. ] ] --- ### Trove **Trove** is organised around multiple Zones. These zones include `book, picture, article, music, map, collection, newspaper, gazette, list, all`. -- <br> So a simple search request looks something like: ```r https://api.trove.nla.gov.au/v2/result?key=<INSERT KEY> &zone=<ZONE NAME> &q=<YOUR SEARCH TERM> ``` --- ### Trove We can narrow this down further by including **Facets** which are categories that restrict our interest to a specified area. The Facets include `place, format, decade, year, month, language, title, category`. -- <br> Here's how to limit the search to Theses (using the format facet): ```r https:// api.trove.nla.gov.au/v2/result?key=INSERT_KEY &zone=book &q=INSERT_SEARCH_TERMS &l-format=Thesis ``` --- ### How about a test run? Here's a workflow to try using the TROVE API: -- <br> .instructions[ * Get a TROVE API key (https://help.nla.gov.au/trove/building-with-trove/api). * Construct a simple, two term search query on the newspapers collection and limit the search to a specific number of records. * Use the site ‘JSON to CSV Converter’ (https://json-csv.com) to save the results to file. * Download the resulting CSV file. ] --- ### More Trove.. https://help.nla.gov.au/trove/trove-api-workshop https://help.nla.gov.au/trove/building-with-trove/api-version-2-technical-guide  --- class: center, middle # Where to next? --- ### Hook APIs together to create your own custom services  .footnote[ Source: https://slides.com/wragge/introducing-apis-vala#/24 ] --- ### Test an API Console  .footnote[ Source: https://open-platform.theguardian.com/explore/ ] ??? TROVE and The Guardian provide methods to explore their APIs. --- ### Tim Sherratt’s GLAM Workbench  .footnote[ Source: https://slides.com/wragge/introducing-apis-vala#/28 ] --- ### Tools for Web crawling Web crawling can be effectively performed using any of these tools: * scrapy (http://scrapy.org) a collection of Python scripts * rvest (https://github.com/tidyverse/rvest) a collection of R scripts -- Other software: * Helium Scraper (http://www.heliumscraper.com) * Outwit (http://www.outwit.com) * Outwit Hub (https://www.outwit.com/products/hub) --- ### References * About Trove. The National Library of Australia. Retrieved from https://help.nla.gov.au/trove/using-trove/getting-to-know-us * API version 2 technical guide (TROVE). The National Library of Australia. Retrieved from https://help.nla.gov.au/trove/building-with-trove/api-version-2-technical-guide * Hegelich, Simon. (2018). R for Social Media Analysis. The SAGE Handbook of Social Media Research Methods. DOI: http://dx.doi.org/10.4135/9781473983847 * Janetzko, Dietmar. (2018) The Role of APIs in Data Sampling from Social Media. The SAGE Handbook of Social Media Research Methods. DOI: http://dx.doi.org/10.4135/9781473983847 * Practice getting data from the Twitter API. Retrieved from https://cfss.uchicago.edu/notes/twitter-api-practice/ --- # References * Sherratt, Tim. (2019). Introducing APIs. Zenodo. http://doi.org/10.5281/zenodo.3545007 * Sherratt, Tim. (2019). GLAM-Workbench/trove-api-intro (Version v0.1.0). Zenodo. http://doi.org/10.5281/zenodo.3549551 * Stewart, Bonnie. (2018). Twitter as Method: Using Twitter as a Tool to Conduct Research. The SAGE Handbook of Social Media Research Methods. DOI: http://dx.doi.org/10.4135/9781473983847 * Ignatow, Gabe & Mihalcea, Rada. (2017). Text Mining: A Guidebook for the Social Sciences. DOI: https://dx.doi.org/10.4135/9781483399782 * Wasser, Leah. (2018). Earth Analytics Course in the R Programming Language (Version r-earth-analytics). Zenodo. http://doi.org/10.5281/zenodo.1326873 ---